AI is fun. It’s moving fast, and the next AI Winter feels a long way off – although it will likely come, as it does to all trendy, sexy technical topics. But whilst it’s hot, let’s ride the wave! If you just want to dive in and want the tl;dr, jump to the Summary / tl;dr at the end.

I have been playing around with these tools, and man oh man – this is fun.

In this post, I will:

- Show you how to get a variety of offline Large Language Models up and running on your PC.

- Introduce you to the concept of censored and uncensored LLMs.

- Show you how to connect to your offline LLM through code, and start using LLMs in your programming.

One step at a time…

*breathe* Seriously, I am so excited about this post.

Okay – so… some background. Large Language Models (LLMs) are the very cool tech at the heart of the current wave of AI. ChatGPT is simply a brand wrapped around the GPT LLM, currently at version 5. So far, so good.

If you want to see version 2 (GPT-2) running inside Excel, entirely offline, you can do that here. And it’s worth doing, as it gives you a really good way of looking ‘inside’ an LLM. Poke around. Figure out how it works. Learn the terms, get a feel for it.

Why offline is a ‘big deal’

People are being conditioned to think of LLMs and AI as ‘something that happens on someone else’s computer’. Microsoft want you to send them your data and pay for a subscription so that you can use CoPilot Pro. As does OpenAI with ChatGPT. As does Google with Gemini.

Your data is valuable. It is important.

- Everything you share with ChatGPT, you share with OpenAI.

- Everything you share with CoPilot, you share with Microsoft AND OpenAI.

- Everything you share with Gemini, you share with Google.

If you decide to use AI to explain what your MRI means, or get it to explain how to improve your code, or get it to explain how to improve your essay, you are sharing that data. How they use this data is governed by their privacy policies and data-usage policies. Your implied acceptance of their terms comes from using their service. Your explicit acceptance of their terms comes from clicking Okay on whatever agreement they push in front of you.

You don’t have to be the product. In 1973, Richard Serra argued in “Television Delivers People” that if something is free, you – the consumer – are the product.

Now consider that The Internet Delivers People…

An offline LLM does not share your data. It keeps your data on your PC.

Anyway! Back to AI and LLMs…

Let’s go deeper…

Let’s have a look at some of the LLMs roaming in the wild. Meta (Facebook) have created LLaMa. There are some really good conversations around whether LLaMa is Open Source or not (it’s not). This is a common issue with LLMs, and a whole grey area of its own: are they commercial or open source? Was the training dataset commercial or open source? If a model is trained on a dataset that contains copyrighted material, where do we stand? These are questions for another day…

Vicuna is based on LLaMa, fine-tuned on user-shared conversations collected from ShareGPT.

There are also LLMs for specific purposes (trained on specific datasets), such as StarChat-Alpha (for writing code).

How do we judge an LLM?

It produces data. We can analyse that data and produce metrics. This allows for services like HuggingFace’s Open LLM Leaderboard, which gives a detailed breakdown of current models. Obviously, to derive value from the metrics, you need to understand what they mean:

HuggingFace Metrics Explained

“We evaluate models on 6 key benchmarks using the Eleuther AI Language Model Evaluation Harness, a unified framework to test generative language models on a large number of different evaluation tasks.

- AI2 Reasoning Challenge (25-shot) – a set of grade-school science questions.

- HellaSwag (10-shot) – a test of commonsense inference, which is easy for humans (~95%) but challenging for SOTA models.

- MMLU (5-shot) – a test to measure a text model’s multitask accuracy. The test covers 57 tasks including elementary mathematics, US history, computer science, law, and more.

- TruthfulQA (0-shot) – a test to measure a model’s propensity to reproduce falsehoods commonly found online. Note: TruthfulQA is technically a 6-shot task in the Harness because each example is prepended with 6 Q/A pairs, even in the 0-shot setting.

- Winogrande (5-shot) – an adversarial and difficult Winograd benchmark at scale, for commonsense reasoning.

- GSM8k (5-shot) – diverse grade school math word problems to measure a model’s ability to solve multi-step mathematical reasoning problems.

For all these evaluations, a higher score is a better score. We chose these benchmarks as they test a variety of reasoning and general knowledge across a wide variety of fields in 0-shot and few-shot settings.”
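The “n-shot” numbers above describe how many solved examples are placed in the prompt before the question being scored: a 0-shot prompt contains only the question, while a 25-shot prompt prepends 25 worked examples. The sketch below illustrates that idea only; `build_few_shot_prompt` is a hypothetical helper, and its “Question:/Answer:” layout is an assumption, not the Evaluation Harness’s actual prompt template.

```python
def build_few_shot_prompt(examples, question, k):
    """Return a prompt made of the first k solved examples plus the new question.

    Hypothetical helper: the "Question:/Answer:" layout is an illustrative
    assumption, not the template used by any real benchmark.
    """
    if k > len(examples):
        raise ValueError(f"asked for {k} shots but only {len(examples)} examples available")
    shots = [f"Question: {q}\nAnswer: {a}" for q, a in examples[:k]]
    # The model is expected to continue the text after the final "Answer:".
    shots.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(shots)


examples = [
    ("What gas do plants absorb from the air?", "Carbon dioxide"),
    ("What force pulls objects towards the Earth?", "Gravity"),
]

question = "What is the boiling point of water at sea level?"
print(build_few_shot_prompt(examples, question, k=0))  # 0-shot: just the question
print("---")
print(build_few_shot_prompt(examples, question, k=2))  # 2-shot: two examples first
```

More shots give the model more context about the expected answer format, which is why the same model can score differently on a benchmark depending on the shot count.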

Censored vs uncensored models…

This is an important question for you to consider.

Most LLMs you will be familiar with are censored models. If you ask ChatGPT an awkward question, it will reply with a message explaining that it can’t answer you.

Please consider giving Eric Hartford’s “Uncensored Models” a read, and then pop back.

LET ME PLAY WITH AI DAMNIT

Okay, okay!

Pop over to ollama.com and download the tool. This is a wonderful little tool that makes playing with LLMs as simple as “Download that one, try it. Next.”

Here you can see me running the previously downloaded llama2, asking it a question and ending the session, then downloading wizard-vicuna-uncensored, running it, and asking it the same question.

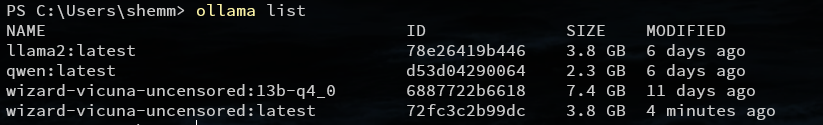

Why did it download wizard-vicuna-uncensored, when ollama list said it was already installed?

Good question! Because I ran plain “wizard-vicuna-uncensored” with no tag, ollama fetched the latest version of the model. If I’d specified “wizard-vicuna-uncensored:13b-q4_0”, it would have used the existing model.
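Ollama model references follow a `name:tag` pattern, and when the tag is left off, `latest` is assumed. So the bare name and the `13b-q4_0` tag point at different entries, even though they share a name. The sketch below models that defaulting rule; `split_model_name` is a hypothetical helper, and the `installed` set is made-up data standing in for what `ollama list` shows. It is a simplified view, not how Ollama itself resolves or stores models.

```python
def split_model_name(model):
    """Split an Ollama-style model reference into (name, tag).

    Hypothetical helper: mirrors the rule that a missing tag means "latest".
    Only a colon after the last "/" is treated as the tag separator, so a
    registry host with a port (e.g. "host:5000/model") stays in the name.
    """
    slash = model.rfind("/")
    colon = model.rfind(":")
    if colon > slash:
        return model[:colon], model[colon + 1:]
    return model, "latest"


# Made-up (name, tag) pairs standing in for locally installed models.
installed = {("wizard-vicuna-uncensored", "13b-q4_0"), ("llama2", "latest")}

for requested in ("wizard-vicuna-uncensored", "wizard-vicuna-uncensored:13b-q4_0"):
    name, tag = split_model_name(requested)
    status = "already installed" if (name, tag) in installed else "not found locally, so it gets pulled"
    print(f"{requested!r} -> {name}:{tag} ({status})")
```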

So… now what can I do?

Well, you can experiment with all the models available to ollama! Keep an eye out for the quantization level of your model (the “q4_0” in “13b-q4_0”, for example). Quantization stores the weights of the neural network that powers the LLM at lower numeric precision, which shrinks the model, while the “13b” part tells you its size: roughly 13 billion parameters. Choosing between versions often boils down to a question of capabilities and accuracy versus hardware requirements. It isn’t always a straight trade, though: there is often a sweet spot between hardware requirements and acceptable capabilities and accuracy, with diminishing returns at higher values. It also doesn’t take into account the value of fine-tuning a model. Some caveats to be aware of:

- Running LLMs needs ‘beefy’ PC specifications.

- My record RAM usage so far has been 31.5 GB on a 32GB PC (running llama2_70b_chat_uncensored_GGML).

- The models themselves are ‘big’ – remember to ‘ollama rm’ models you no longer need!

- If your PC is begging for mercy because you’re using too much RAM, use a version of the model with lower requirements. Many model pages on HuggingFace helpfully include a “Max RAM required” column, and a “Use case” column outlining the potential trade-off.
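As a rough rule of thumb, the memory needed just to hold a model’s weights is its parameter count multiplied by the bits stored per weight, divided by eight. The sketch below applies that arithmetic; `approx_weight_memory_gb` is a hypothetical helper, and the bit widths are illustrative assumptions. It ignores the context (KV) cache, runtime overhead, and the per-block scale values that formats such as q4_0 also store, so treat the results as a floor rather than a prediction.

```python
def approx_weight_memory_gb(parameters_billions, bits_per_weight):
    """Rough lower bound on memory (GB, i.e. 10**9 bytes) needed to hold the weights.

    Hypothetical helper: ignores the KV cache, runtime overhead and
    quantization metadata, so real usage will be higher.
    """
    total_bytes = parameters_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Compare common model sizes at full (16-bit) and quantized precisions.
for params in (7, 13, 70):
    for bits in (16, 8, 4):
        gb = approx_weight_memory_gb(params, bits)
        print(f"{params:>3}B parameters at {bits:>2}-bit: ~{gb:.1f} GB")
```

By this estimate, a 70B model at 4 bits needs roughly 35 GB for its weights alone, which is more than a 32 GB machine has; a 13B model at 4 bits needs only around 6.5 GB.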

And what next?

Well... how about using it from Python?

```python
# Requires: pip install ollama gTTS playsound
# Import the ollama library - for our LLM.
import ollama
# Import playsound - allows us to play mp3s from our code.
import playsound
# Import gTTS - Google text-to-speech. Creates an mp3 when we send it a string.
from gtts import gTTS

# Our magic code - use the llama2 model to respond to what I have put in 'content'.
# 'stream' is an argument to ollama.chat itself, not a key inside the message.
response = ollama.chat(
    model='llama2',
    messages=[
        {
            'role': 'user',
            'content': 'How big is the universe?',
        },
    ],
    stream=False,
)

# Print the response from llama2.
print(response['message']['content'])

# Send the response from llama2 to Google TTS.
mp3 = gTTS(text=response['message']['content'], lang='en', slow=False)

# Save the response from Google TTS as an mp3.
mp3.save('test.mp3')

# Play the mp3.
playsound.playsound('test.mp3')
```

Results in…

If you want to play with ollama in different programming languages, a full selection is available here:

https://github.com/ollama/ollama
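Under the hood, those client libraries talk to the local Ollama server over HTTP, which is part of why so many languages can be supported. The sketch below uses only Python’s standard library and assumes the server is listening on its default address, `http://localhost:11434`, and that its `/api/chat` endpoint accepts a JSON body with `model`, `messages` and `stream`, as described in Ollama’s API documentation. `build_chat_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(model, prompt, url=OLLAMA_CHAT_URL):
    """Build (but do not send) a POST request for a single, non-streamed chat turn."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON reply instead of a stream of chunks
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt):
    """Send the request to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt)) as resp:
        reply = json.loads(resp.read().decode("utf-8"))
    return reply["message"]["content"]


# Usage (requires Ollama running locally with the llama2 model pulled):
# print(ask("llama2", "How big is the universe?"))
```

Nothing here leaves your machine: the request goes to localhost, which is the whole point of running the model offline.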

YOU RAVING HYPOCRITE, YOU’RE USING GOOGLE TEXT TO SPEECH!

Fair. I can’t even begin to defend that point. I am using Google Text-to-Speech here to quickly and easily generate an mp3 of the spoken version of the LLM’s response. I ‘should’ be using an offline TTS engine, and I hope, in a later article, to do exactly that. As it stands, everything I said above about not sharing my data with those ‘other’ businesses goes out the window, as the gTTS library sends my LLM’s response straight to Google. They take the string and give me back an mp3 from their text-to-speech engine.

I will do better.

Summary / tl;dr

If you want to play with offline large language models, download ollama from ollama.com, type a couple of the commands from the video above into Windows Terminal, and off you go! If you want to use an offline large language model in your own programming, use some of the cool libraries I’ve linked to, and you can have a functioning program in about 10 lines of Python.

Seriously, when I said this was cool – I wasn’t kidding. This is stuff I dreamt of as a kid.

Please take away the idea that “The Internet Delivers People”, and think about its relevance to our use of ChatGPT / CoPilot / Gemini. Consider the importance of supporting and developing offline LLMs, and give careful consideration to the merits of censored and uncensored LLMs.